Overview

Performance testing evaluates how effectively a system behaves under workloads that vary along several dimensions. It is an essential part of any software or app development effort. Tests can be run in different ways, with varying data loads and across different gateways. Spike testing, volume testing, endurance testing, configuration testing, capacity testing, and response testing are a few popular types of performance testing.

Performance Testing Definition With Example

Performance testing is a type of non-functional testing that assesses the scalability, stability, and quality of software. It is often carried out by having many users access the same resources at once, or by feeding the system a large amount of data to create a spike, and then observing responsiveness and other performance-related issues.
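As a rough illustration of "many users accessing the same resource", here is a minimal sketch that uses only the Python standard library. The `fake_request` function is a hypothetical stand-in for a call to the system under test (it just sleeps), and the user and request counts are arbitrary assumptions. Dedicated load-testing tools do far more than this.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request() -> None:
    """Hypothetical stand-in for a real request to the system under test."""
    time.sleep(0.01)  # pretend the server takes about 10 ms


def run_virtual_user(requests_per_user: int) -> list[float]:
    """One simulated user: send requests back to back and time each one."""
    latencies = []
    for _ in range(requests_per_user):
        start = time.perf_counter()
        fake_request()
        latencies.append(time.perf_counter() - start)
    return latencies


def run_load(users: int, requests_per_user: int) -> list[float]:
    """Run all virtual users concurrently and collect every latency sample."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = pool.map(run_virtual_user, [requests_per_user] * users)
        return [sample for samples in per_user for sample in samples]


samples = run_load(users=5, requests_per_user=4)
print(f"{len(samples)} requests, slowest took {max(samples) * 1000:.1f} ms")
```

Swapping `fake_request` for a real HTTP call turns this into a very basic load generator, although the threads then share one machine's network and CPU limits.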

Importance of Performance Testing

Performance testing provides insight into problematic interfaces, gateways, and other elements of the system so that they can be fixed during the early stages of development. Types of performance testing such as stress testing and load testing are used to find the capacity of the system and to shape development strategy before rollout.

Role of Performance Testing in Ensuring System Efficiency

Through performance testing, you can check the speed, responsiveness, and efficiency of the system and its software under different simulated workloads. Software performance testing helps developers plan the development process and strengthen the system before launch. Here are the main areas covered by performance testing:

- Identifying throughput bottlenecks

- Quantifying benchmarks and capabilities

- Validating infrastructure to support workloads

- Comparing software and hardware for optimum efficiency

- Verification of compliance with set guarantees of speed and uptime

Understanding Performance Testing

To understand performance testing, you need to know why it is performed and why it’s integral to the development of software and apps. Performance testing is used to determine breaking points, optimize configuration, remove constraints, and assess the system’s current performance.

Key Metrics and Best Practices

The main things measured in software performance testing are breaking points, bottlenecks, response times, inefficiencies, and the capacity of architectural and design elements. The main metrics to capture are:

Throughput: The number of critical tasks, such as downloads or transactions, handled per second.

Resource Utilization: How well the hardware copes when multiple users are using the server at the same time.

Errors & Failures: Performance testing can put the system under more stress than usual in order to expose the errors and failure points that would appear in real-world use.

Concurrency: The number of simultaneous users the system can sustain, which is an important indicator of its performance.

When designing a performance test, a few key things to do beforehand are setting clear goals, creating production-like data, and setting up monitoring that covers the whole system.

Differentiating Performance Testing vs. Performance Engineering

While understanding performance testing is important, you should also be aware of how it differs from performance engineering. Performance engineering starts early, while the basics of the system are being developed, and allows more proactive assessment than performance testing. Performance engineering leverages projections and profiling done by architects, while performance testing examines existing software builds under contention.

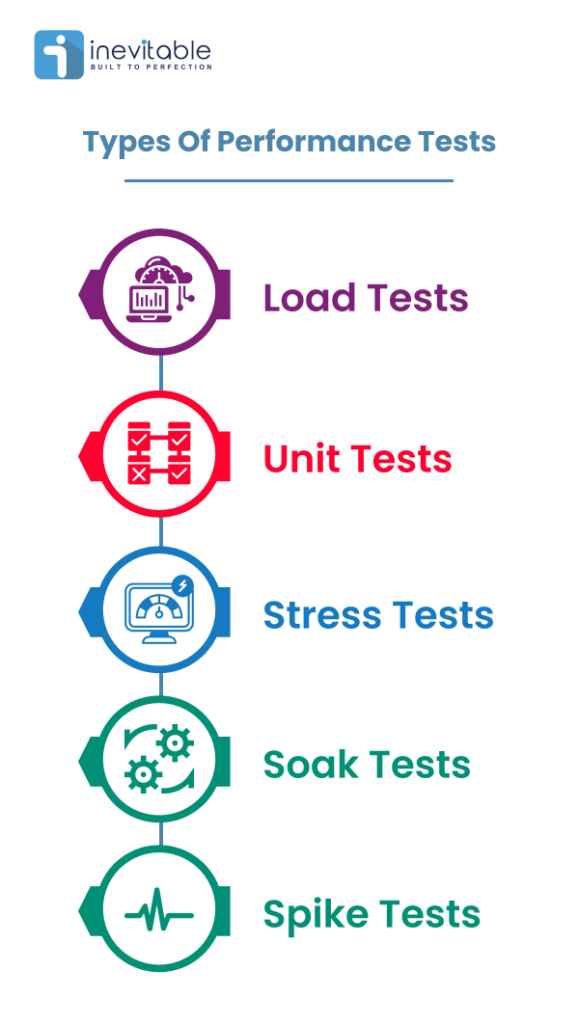

Types of Performance Tests

1. Load Tests: Load testing simulates the number of virtual users that might use an application. By reproducing realistic usage and load conditions and measuring response times, this test helps identify potential bottlenecks. It also shows whether the size of the application’s architecture needs to be adjusted.

2. Unit Tests: These simulate the transactional activity of a functional test campaign. The goal is to isolate transactions that could disrupt the system.

3. Stress Tests: Stress testing evaluates the behavior of systems facing peak activity. These tests significantly and continuously increase the number of users during the testing period.

4. Soak Tests: These increase the number of concurrent users and monitor the behavior of the system over a more extended period. The objective is to see whether intense, sustained activity leads to a drop in performance or places excessive demands on system resources over time.

5. Spike Tests: These seek to understand the implications for the operation of systems when activity levels are above average. Unlike stress testing, spike testing takes into account both the number of users and the complexity of the actions performed (hence the increase in the number of business processes generated).
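One way to picture the difference between these tests is as a "number of virtual users over time" curve. The sketch below shows one possible shape for four of them. All numbers (targets, ramp length, spike timing) are illustrative assumptions, and the soak profile here simply holds an elevated load steady, because for a soak test it is the length of the run that matters.

```python
def load_test(minute: int, target: int = 100, ramp: int = 10) -> int:
    """Ramp up to the expected number of users, then hold that level."""
    return min(target, target * minute // ramp)


def stress_test(minute: int, step: int = 50) -> int:
    """Keep adding users for the whole run to push past normal capacity."""
    return step * (minute + 1)


def soak_test(minute: int, target: int = 100) -> int:
    """Hold a steady load; the run is simply kept going for hours."""
    return target


def spike_test(minute: int, base: int = 100, peak: int = 1000,
               spike_at: int = 5, spike_len: int = 2) -> int:
    """Normal load with one short, sudden burst of extra users."""
    return peak if spike_at <= minute < spike_at + spike_len else base


print("min  load  stress  soak  spike")
for minute in range(10):
    print(f"{minute:>3}  {load_test(minute):>4}  {stress_test(minute):>6}"
          f"  {soak_test(minute):>4}  {spike_test(minute):>5}")
```

Most load-testing tools let you describe a schedule like this directly, so the functions above are only a way to reason about the shapes.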

The Performance Testing Process

1. Identifying the Test Environment: Before testing begins, we need to know the physical test environment, the production environment, and which testing tools are available, including the details of the hardware, software, and network configurations that will be used. This helps testers create more efficient tests and identify challenges they may encounter during performance testing.

2. Setting Performance Acceptance Criteria: This includes the goals and constraints for throughput, response times, and resource allocation. It is also necessary to identify project success criteria outside of these goals and constraints. Testers should be empowered to set performance criteria and goals, because project specifications often do not include a wide enough variety of performance benchmarks, and sometimes include none at all. Where a similar application is available for comparison, it is a good basis for setting performance goals.

3. Planning and Designing Test Scenarios: We can determine how usage is likely to vary amongst end users and identify key scenarios to test for all possible use cases. It is necessary to simulate a variety of end users, plan performance test data, and outline what metrics will be gathered.

4. Preparing Test Environment and Tools: Next, we prepare the testing environment and arrange the tools and other resources needed before execution.

5. Executing Performance Tests: We need to create the performance tests according to our test design and execute and monitor the tests.

6. Resolution and Retesting: Finally, we consolidate, analyze, and share the test results, then tune and test again to see whether performance has improved or degraded. Improvements generally grow smaller with each retest, so stop once the remaining bottleneck is the CPU. At that point, the remaining option is to increase CPU power.
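The acceptance criteria from step 2 and the retesting in step 6 fit together naturally: write the criteria down as data, then run the same check after every tuning round. The sketch below is one way to do that. The metric names, limits, and measured values are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str
    limit: float
    higher_is_better: bool = False

    def passes(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.limit
        return value <= self.limit


# Hypothetical acceptance criteria; real limits come from the project goals.
CRITERIA = [
    Criterion("p95_response_ms", 500),
    Criterion("error_rate_pct", 1.0),
    Criterion("cpu_utilization_pct", 80),
    Criterion("throughput_rps", 200, higher_is_better=True),
]


def evaluate(measured: dict[str, float]) -> list[str]:
    """Return readable failures; an empty list means the run is accepted."""
    failures = []
    for c in CRITERIA:
        value = measured.get(c.metric)
        if value is None:
            failures.append(f"{c.metric}: no measurement")
        elif not c.passes(value):
            bound = "at least" if c.higher_is_better else "at most"
            failures.append(
                f"{c.metric}: measured {value}, need {bound} {c.limit}")
    return failures


# Made-up results from a first run and from a retest after tuning.
rounds = [
    {"p95_response_ms": 620, "error_rate_pct": 0.4,
     "cpu_utilization_pct": 71, "throughput_rps": 240},
    {"p95_response_ms": 480, "error_rate_pct": 0.3,
     "cpu_utilization_pct": 74, "throughput_rps": 260},
]
for number, measured in enumerate(rounds, start=1):
    failures = evaluate(measured)
    print(f"round {number}:", failures if failures else "accepted")
```

Keeping the criteria in one list makes it easy to share them with stakeholders and to see exactly which goal a retest has or has not met.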

Performance Testing Tools

Performance testing tools are essential for evaluating the speed, responsiveness, scalability, and stability of a system under various conditions. These tools help in identifying performance bottlenecks, measuring system behavior, and ensuring that applications or systems meet performance expectations. Here’s an overview of common performance testing tools:

LoadRunner: A widely used performance testing tool from Micro Focus (now part of OpenText). It supports various protocols, allowing simulation of real user behavior. LoadRunner helps in measuring system performance under load, stress, and other conditions.

JMeter: An open-source tool by Apache, primarily used for load testing. It’s Java-based and offers a user-friendly interface for creating and executing test plans to measure performance for different protocols and applications.

Gatling: Another open-source tool, Gatling is designed for load testing. It’s based on Scala and provides a high-performance engine to simulate thousands of users. Gatling’s scripting is done in a DSL (Domain Specific Language) that’s easy to learn.

Apache Benchmark (ab): A command-line tool for measuring the performance of web servers. It’s simple and lightweight, allowing users to send multiple requests to a server and measure the time taken to process these requests.

NeoLoad: A tool originally developed by Neotys (now part of Tricentis) for load and performance testing of web and mobile applications. It offers features for creating scenarios, test scripting, and analyzing system behavior under various conditions.

BlazeMeter: Formerly owned by CA Technologies and then Broadcom, and now part of Perforce, BlazeMeter offers performance testing in the cloud. It allows for running load tests, performance tests, and stress tests using an intuitive interface.

Visual Studio Load Test: Part of Microsoft’s Visual Studio Enterprise, it allows users to create and run load tests on web applications. It integrates well with Visual Studio and Azure, making it suitable for .NET-based applications.

AppDynamics: While primarily known for application performance monitoring, AppDynamics also provides capabilities for performance testing. It helps in understanding application behavior and performance metrics under load.

Locust: An open-source load-testing tool that allows you to write test scenarios in Python. It’s scalable, allows distributed load generation, and offers an intuitive UI for test monitoring.

These tools vary in terms of their features, supported technologies, ease of use, scalability, and pricing. The choice of tool often depends on the specific requirements, such as the type of application, technology stack, scalability needs, and budget constraints.

Performance Testing Metrics and Success Criteria

Understanding Performance Testing Metrics

Performance testing metrics are crucial for gauging the effectiveness and health of a system under various loads. Here’s a brief overview of key performance testing metrics:

Response Time: This measures the time taken for the system to respond to a user request. It includes network latency, server processing time, and client-side rendering time.

Throughput: Throughput denotes the number of transactions processed by the system per unit of time. It helps determine the system’s capacity and performance under load.

Error Rate: This metric tracks the number of errors encountered during testing. It includes server errors, timeouts, or other issues that impact user experience.

Concurrency: Refers to the number of users or transactions that the system can handle simultaneously without performance degradation. It indicates system stability under load.

Resource Utilization: Monitoring CPU, memory, disk usage, and network bandwidth helps understand how system resources are utilized during various test scenarios.

Latency: Latency measures the time taken for data to travel from its source to its destination. High latency can affect user experience, especially in distributed systems.

Peak Response Time: Unlike average response time, peak response time identifies the maximum time taken for a transaction under stress conditions. It helps pinpoint worst-case scenarios.

Scalability: This metric assesses how well the system can handle increased load by adding resources, users, or data without compromising performance.

Stress Metrics: Including maximum load capacity, breaking point, and how the system behaves beyond its capacity, these metrics help determine system limitations.

Endurance or Soak Testing Metrics: These measure system stability over an extended period under a consistent load, identifying memory leaks or other issues over time.

Understanding these metrics helps in analyzing system behavior, identifying performance bottlenecks, and making informed decisions to optimize and improve the system’s performance. Different tools and techniques are used to measure and track these metrics during performance testing.
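To make a few of these metrics concrete, the sketch below computes average, 95th percentile, and peak response time, plus throughput and error rate, from a list of request samples. The sample data is invented, and the percentile uses the simple nearest-rank method, which is only one of several common definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    elapsed_ms: float
    ok: bool


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct
    percent of the samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Reduce raw request samples to a handful of headline metrics."""
    times = [s.elapsed_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_ms": sum(times) / len(times),
        "p95_response_ms": percentile(times, 95),
        "peak_response_ms": max(times),
        "throughput_rps": len(samples) / duration_s,
        "error_rate_pct": 100 * errors / len(samples),
    }


# Ten made-up requests observed over a 5-second window.
raw = [(120, True), (95, True), (310, True), (88, True), (1500, False),
       (140, True), (101, True), (99, True), (132, True), (115, True)]
samples = [Sample(ms, ok) for ms, ok in raw]
report = summarize(samples, duration_s=5.0)
for name, value in report.items():
    print(f"{name}: {value}")
```

Notice how the single slow, failed request pulls the average up to 270 ms while most requests finished in about 100 ms. That is why percentiles and peak values are usually reported alongside the average.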

Characteristics of Effective Performance Testing

Effective performance testing relies on a number of essential qualities to assess and validate a system’s performance thoroughly. Here are the most important ones:

Clear Objectives: It’s critical to establish precise objectives for performance testing. Target response times, throughput, scalability bounds, and system stability under load are a few examples of such goals.

Realistic Scenarios: It is essential to create test scenarios that closely resemble actual usage patterns and circumstances. This helps show how the system behaves in situations real users are likely to create.

Repeatability: To guarantee consistency and dependability of outcomes, tests must be repeatable. Rerunning tests under identical circumstances facilitates the verification of performance gains or the detection of regressions.

Scalability Testing: It’s critical to determine whether the system can withstand higher loads brought on by the addition of users or resources. Testing for scalability aids in assessing how well the system can expand to accommodate changing needs.

Comprehensive Coverage: Testing a range of performance parameters, such as load, stress, endurance, and spike testing, guarantees extensive coverage of the behavior of the system under diverse circumstances.

Monitoring and Analysis: Throughout tests, ongoing monitoring and analysis of performance indicators provide insights into the behavior of the system and make it possible to spot bottlenecks or potential improvement areas.

Early Integration: By carrying out performance testing early in the development process, performance problems can be found and fixed more affordably and quickly.

Real-time Reporting: It’s essential to submit test results accurately and on time. It facilitates timely and informed decision-making by stakeholders, effectively resolving performance challenges.

Collaboration and Communication: Developers, testers, and other stakeholders must work together to conduct effective performance testing. Clear communication ensures that everyone is aware of the test’s goals and outcomes.

Feedback Loop for Improvement: Iterative performance testing that incorporates user feedback is necessary to continuously improve test scenarios and methodology.

Tool Selection and Expertise: It’s critical to select the right tools and to have the know-how to use them efficiently. The chosen tools should support the system’s technology stack and specifications.

Adhering to these characteristics ensures that performance testing is not just a routine exercise but a strategic approach to validate, optimize, and enhance a system’s performance and reliability.
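Repeatability and realistic scenarios can pull in opposite directions, because realistic traffic is random. One common way around this is to generate the workload from a seeded random number generator, so that the mix looks realistic but every rerun sends exactly the same sequence. The action names and weights below are hypothetical.

```python
import random

# Hypothetical user actions and their share of real traffic, in percent.
ACTIONS = ["browse", "search", "add_to_cart", "checkout"]
WEIGHTS = [60, 25, 10, 5]


def build_workload(seed: int, n_requests: int) -> list[str]:
    """Same seed, same sequence: reruns are directly comparable."""
    rng = random.Random(seed)  # private generator, independent of global state
    return rng.choices(ACTIONS, weights=WEIGHTS, k=n_requests)


first_run = build_workload(seed=42, n_requests=1000)
second_run = build_workload(seed=42, n_requests=1000)
print("identical workloads:", first_run == second_run)
print("share of 'browse':", first_run.count("browse") / len(first_run))
```

Recording the seed next to the test results means a surprising run can be reproduced later with the same inputs.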

Key Success Metrics

Key success metrics in performance testing can vary based on the specific objectives and nature of the system being tested. However, several fundamental metrics are commonly used to gauge the success of performance testing efforts:

Response Time: The time taken for the system to respond to a user request. It directly impacts user experience and is a crucial metric in determining system performance.

Throughput: The rate at which the system processes transactions or requests per unit of time. It indicates the system’s capacity and efficiency under load.

Error Rate: The frequency of errors encountered during testing. It includes server errors, timeouts, or other issues impacting user experience and system stability.

Resource Utilization: Monitoring CPU, memory, disk usage, and network bandwidth helps understand how efficiently system resources are utilized. Balancing resource usage is crucial for optimal performance.

Scalability Limits: Determining the maximum load the system can handle before performance degrades significantly. It indicates the system’s ability to grow and accommodate increased demand.

Stability under Load: Assessing how the system behaves when subjected to sustained loads over time. Stability metrics reveal if the system can maintain performance without deteriorating.

Peak Performance Metrics: Identifying maximum response times, throughput, and resource usage under peak loads. It helps understand worst-case scenarios and plan for capacity accordingly.

Latency: The delay in data transmission or processing. Low latency is crucial, especially in systems where real-time responsiveness is essential.

Reliability and Availability: Ensuring the system remains available and reliable under varying loads. Metrics related to uptime, downtime, and failure recovery times are essential.

Customer Satisfaction or User Experience Metrics: Feedback from users or stakeholders on system performance. This qualitative data complements quantitative metrics, reflecting actual user satisfaction.

The selection of these metrics depends on the nature of the application, user expectations, and business requirements. An effective combination of these metrics provides a holistic view of the system’s performance and helps in identifying areas for improvement.
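As an example of turning one of these metrics into a number, the sketch below estimates a scalability limit from a series of test runs at increasing load. It assumes you already have the 95th percentile response time for each user count, and it treats "performance degrades significantly" as "p95 exceeds a chosen limit". Both the data and the 500 ms limit are illustrative assumptions.

```python
from typing import Optional


def scalability_limit(results: list[tuple[int, float]],
                      max_p95_ms: float) -> Optional[int]:
    """Largest tested user count whose p95 stayed within the limit,
    stopping at the first load level that breaches it."""
    limit = None
    for users, p95_ms in sorted(results):
        if p95_ms > max_p95_ms:
            break
        limit = users
    return limit


# Made-up (concurrent users, p95 response time in ms) pairs from five runs.
results = [(100, 180.0), (200, 195.0), (400, 240.0),
           (800, 460.0), (1600, 2100.0)]
print("supported users:", scalability_limit(results, max_p95_ms=500))
```

The answer is only as precise as the load steps that were tested. Here the real limit lies somewhere between 800 and 1600 users, so a follow-up run in that range would narrow it down.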

Automation in Performance Testing

Advantages of Automating Performance Testing

Automating performance testing offers several advantages that significantly enhance the testing process and overall system quality:

Repeatability and Consistency: Automated tests guarantee consistent execution by repeating the same test scenarios precisely, which lowers the chance of human error and reduces variability in results.

Efficiency and Time Savings: Automation greatly accelerates the testing procedure. Compared with manual testing, it allows several tests to be run simultaneously or in sequence, saving time.

Scalability: Automated tools can replicate a high volume of users or transactions, which makes scalability testing possible in situations where manual testing would not be feasible.

Increased Test Coverage: Automated tests can evaluate a greater variety of scenarios, such as load, stress, and endurance testing, and so offer a more thorough evaluation of the performance of the system.

Early Performance Issue Discovery: Performance tests can be automated into the development process to enable early performance issue discovery. This facilitates speedier issue resolution and lowers the cost of correcting issues later in the cycle.

Regression Testing: Continuous integration and continuous deployment (CI/CD) pipelines can incorporate automated performance tests, allowing for frequent regression testing to make sure that code modifications or performance enhancements don’t negatively impact system performance.

Data-Driven Testing: Automation provides insights into performance under multiple scenarios by allowing diverse datasets and settings to be tested systematically.

Resource Optimization: By planning and carrying out tests during off-peak hours, automated testing can maximize test coverage and optimize resource utilization.

Performance Monitoring: Testers and developers can measure performance indicators and instantly assess results with the help of certain automation solutions that provide real-time monitoring and reporting capabilities.

Cost-Effectiveness: By optimizing workflows and raising productivity, automated performance testing eventually lowers testing expenses, even though the initial setup may need a financial outlay.

Encourages Collaboration: By offering a standard structure and vocabulary for talking about and resolving performance issues, automation encourages collaboration between the development, testing, and operations teams.

In conclusion, automated performance testing greatly enhances the effectiveness, dependability, and thoroughness of testing endeavors, enabling teams to detect and resolve performance concerns at an early stage of the development process.
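For the regression testing point in particular, a CI/CD pipeline needs a clear pass or fail signal. A minimal sketch, assuming lower-is-better metrics and a stored baseline from a previous good build, could look like this. The metric names, values, and the 10% tolerance are illustrative assumptions.

```python
def find_regressions(baseline: dict[str, float],
                     current: dict[str, float],
                     tolerance: float = 0.10) -> list[str]:
    """List lower-is-better metrics that got worse than the baseline
    by more than the given tolerance."""
    regressions = []
    for metric, base_value in baseline.items():
        new_value = current.get(metric)
        if new_value is None:
            continue  # metric not measured in this run
        if new_value > base_value * (1 + tolerance):
            change = 100 * (new_value - base_value) / base_value
            regressions.append(
                f"{metric}: {base_value} -> {new_value} (+{change:.0f}%)")
    return regressions


# Made-up numbers: the baseline comes from the last accepted build.
baseline = {"p95_response_ms": 400.0, "error_rate_pct": 0.5}
current = {"p95_response_ms": 480.0, "error_rate_pct": 0.5}

problems = find_regressions(baseline, current)
for line in problems:
    print("REGRESSION", line)

# A CI step would typically end with sys.exit(exit_code) to fail the build.
exit_code = 1 if problems else 0
print("exit code:", exit_code)
```

The tolerance exists because performance measurements are noisy. Without it, small run-to-run variation would fail builds that have not actually regressed.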

Enhancing Agility Through Automation

Automation is essential for improving agility in several software development and operational process domains. This is how it helps to improve agility:

Faster Time-to-Market: Tasks like configuration management, testing, and deployment are accelerated by automated processes. This shortens development cycles, so software updates and products can reach the market more quickly.

Continuous Integration and Continuous Deployment (CI/CD): Automated tools make code changes easier to integrate, test, and roll out. Build, test, and deployment phases are automated by CI/CD pipelines, enabling frequent and dependable releases.

Effective Testing: Testing is accelerated by automation, which includes unit, integration, and end-to-end testing. This quick feedback loop facilitates quick iterations and early bug detection, enhancing program quality.

Resource Optimization: Automation makes optimal use of infrastructure resources to optimize resource allocation. Auto-scaling, containerization, and dynamic provisioning all assist in balancing resources against changing demand.

Decreased Manual Errors: Automated procedures reduce the possibility of human error in routine operations, guaranteeing precision and uniformity in testing, configurations, and deployments.

Streamlined Operations: Routine operational chores like logging, monitoring, and incident response are made easier by automation. Remedial procedures and automated alerts improve system dependability and minimize downtime.

Flexibility and Adaptability: Automated solutions offer the flexibility required in agile organizations by being easily scaled depending on changing company needs or requirements.

Cross-Functional Collaboration: By offering a shared platform and established procedures, automation technologies promote cooperation between cross-functional teams. Quick decision-making and feedback are made possible by this teamwork.

Boosting DevOps Practices: Development and operations teams work together more when automation is a core component of the DevOps culture. It fosters a culture of continual development, shared duties, and goal alignment.

Data-Driven Decision Making: By gathering and analyzing data, automation solutions offer insightful information that helps in decision-making. Performance optimization and strategic decision-making are aided by metrics and analytics obtained through automated processes.

Organizations can get enhanced agility by utilizing automation throughout the software development lifecycle. This enables them to promptly react to market fluctuations, produce software of superior quality at a faster pace, and effectively adjust to changing client demands.

Advanced Topics in Performance Testing

Cloud Performance Testing

Performance testing can also be done on the cloud by developers. The advantage of cloud performance testing is that it can test apps more extensively while keeping the financial advantages of being in the cloud.

Initially, companies believed that moving performance testing to the cloud would simplify the process and make it more scalable, and that the move would resolve all of their issues. Once they started doing so, however, they discovered that problems remained, because the company does not have comprehensive, white-box knowledge of the cloud provider’s side of the system.

One difficulty is complacency when moving an application from an on-premises setting to the cloud. IT personnel and developers may assume that an application will keep working as before once it is moved, and push ahead with a speedy rollout while cutting back on testing and quality assurance. Results may also be less accurate than with on-premises testing, because the application is being tested on hardware from a different vendor. Development and operations teams should do load testing, evaluate scalability, look for security flaws, and take user experience into account.

Inter-application communication can be one of the main problems when migrating an application to the cloud. Compared to on-premises systems, cloud platforms usually impose tighter security constraints on internal communications. Before migrating, a business should create a comprehensive map of all the servers, ports, and communication pathways the application uses. Monitoring performance can also be beneficial.
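Once the map of servers, ports, and communication pathways exists, it can double as a quick connectivity check after the migration. The sketch below tries a TCP connection for each entry in such a map. The pathway name is hypothetical, and the demo points at a throwaway listener on the local machine so that it runs without any real servers.

```python
import socket


def is_reachable(host: str, port: int, timeout_s: float = 1.0) -> bool:
    """True if a TCP connection to host:port can be opened in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, timed out, unreachable, DNS failure, ...
        return False


def check_paths(paths: dict[str, tuple[str, int]]) -> dict[str, bool]:
    """Check every named pathway in the communication map."""
    return {name: is_reachable(host, port)
            for name, (host, port) in paths.items()}


# Demo only: a temporary local listener stands in for a real dependency.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen()
port = listener.getsockname()[1]

status = check_paths({"app -> db (hypothetical)": ("127.0.0.1", port)})
listener.close()
print(status)
```

A successful TCP connection only shows that the path is open. It says nothing about latency or bandwidth, so a check like this complements load testing in the new environment but does not replace it.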

Addressing Performance Testing Challenges

Some challenges within performance testing are as follows:

- Some tools may only support web applications.

- Free variants of tools may not work as well as paid variants, and some paid tools may be expensive.

- Tools may have limited compatibility.

- It can be difficult for some tools to test complex applications.

Organizations should watch out for performance bottlenecks in the following:

- CPU

- Memory

- Network utilization

- Disk usage

- OS limitations

Other common performance problems may include the following:

- Long load times

- Long response times

- Insufficient hardware resources

- Poor scalability

Conclusion

In software engineering, performance testing is necessary before bringing any software product to market. It ensures customer satisfaction and protects investors against product failure. The cost of performance testing is usually more than made up for by improved customer satisfaction, loyalty, and retention.

By Nilofar Jargela

I am co-founder & CEO at Inevitable Infotech – IT service provider. Established in 2018, Inevitable Infotech is the brainchild of technocrats having over a decade’s experience in building smart IT solutions.